- About MAA

- Membership

- MAA Publications

- Periodicals

- Blogs

- MAA Book Series

- MAA Press (an imprint of the AMS)

- MAA Notes

- MAA Reviews

- Mathematical Communication

- Information for Libraries

- Author Resources

- Advertise with MAA

- Meetings

- Competitions

- Programs

- Communities

- MAA Sections

- SIGMAA

- MAA Connect

- Students

- MAA Awards

- Awards Booklets

- Writing Awards

- Teaching Awards

- Service Awards

- Research Awards

- Lecture Awards

- Putnam Competition Individual and Team Winners

- D. E. Shaw Group AMC 8 Awards & Certificates

- Maryam Mirzakhani AMC 10 A Awards & Certificates

- Two Sigma AMC 10 B Awards & Certificates

- Jane Street AMC 12 A Awards & Certificates

- Akamai AMC 12 B Awards & Certificates

- High School Teachers

- News

You are here

A Selection of Problems from A.A. Markov’s Calculus of Probabilities: Problem 4 – A Simple Gambling Game

This is an example of what would later be called a Markov chain. It has a two-dimensional state space of the form \((x, y)\), where \(x\) is the fortune of player \(L\) and \(y\) is the fortune of player \(M\). States \((l, 0), (l, 1), \dots, (l, m - 1)\) and \((0, m), (1, m), \dots, (l - 1, m)\), are absorbing, meaning that once the chain enters one of these states, it cannot leave. The only transitions are from state \((x, y)\) to state \((x + 1, y)\), with probability \(p\), and to state \((x, y + 1)\), with probability \(q\), except when \((x, y)\) is absorbing. Ironically, when Markov started writing about experiments connected in a chain in 1906, his examples were nothing like this one.12 Even in the fourth edition of the book, which was published 18 years after he first wrote about chains, he still used the same approach as he did here.

Problems of this type were studied by Pierre-Simon Laplace (1749–1827), Daniel Bernoulli (1700–1782), and Viktor Bunyakovskiy (1804–1889), among others, long before Markov. However, these works were almost certainly not the motivation for Markov's development of chains.

|

Задача 4ая. Два игрока, которых мы назовём \(L\) и \(M\), играют в некоторую игру, состоящую из последовательных партий. Каждая отдельная партия должна окончиться для одного из двух игроков \(L\) и \(M\) выигрышем её, а для другого проигрышем, при чем вероятность выиграть её для \(L\) равна \(p\), а для \(M\) равна \(q=1-p\), независимо от результатов других партий. Вся игра окончиться, когда \(L\) выиграет \(l\) партий или \(M\) выиграет \(m\) партий: в первом случае игру выиграет \(L\), а в втором \(M\). Требуется опреледить вероятности выиграть игру для игрока \(L\) и для игрока \(M\), которые мы обозначим символами \((L)\) и \((M)\). Эта задача известна с половины семнадцатого столетия и заслуживает особого внимания, так как в различных приёмах, предположенных Паскалем и Ферматом для её решения, можно видеть начало исчисления вероятностей. |

4th Problem. Two players, whom we call \(L\) and \(M\), play a certain game consisting of consecutive rounds.

Each individual round must end by one of the two players \(L\) and \(M\) winning it and the other losing it, where the probability of winning for \(L\) is \(p\) and for \(M\) is \(q = 1 - p\), independent of the results of the other rounds.

The entire game is finished when \(L\) wins \(l\) rounds or \(M\) wins \(m\) rounds. In the first case, the game is won by \(L\), and in the second, by \(M\).

It is required to determine the probabilities of winning the game for player \(L\) and player \(M\), which we denote by the symbols \(L\) and \(M\).

This problem has been known since the middle of the 17th century and deserves particular attention, since distinct methods of solution proposed by Pascal and Fermat can be seen as the beginning of the calculus of probabilities.

Continue to Markov's first solution of Problem 4.

Skip to Markov's second solution of Problem 4.

Skip to Markov's numerical example for Problem 4.

Skip to statement of Problem 8.

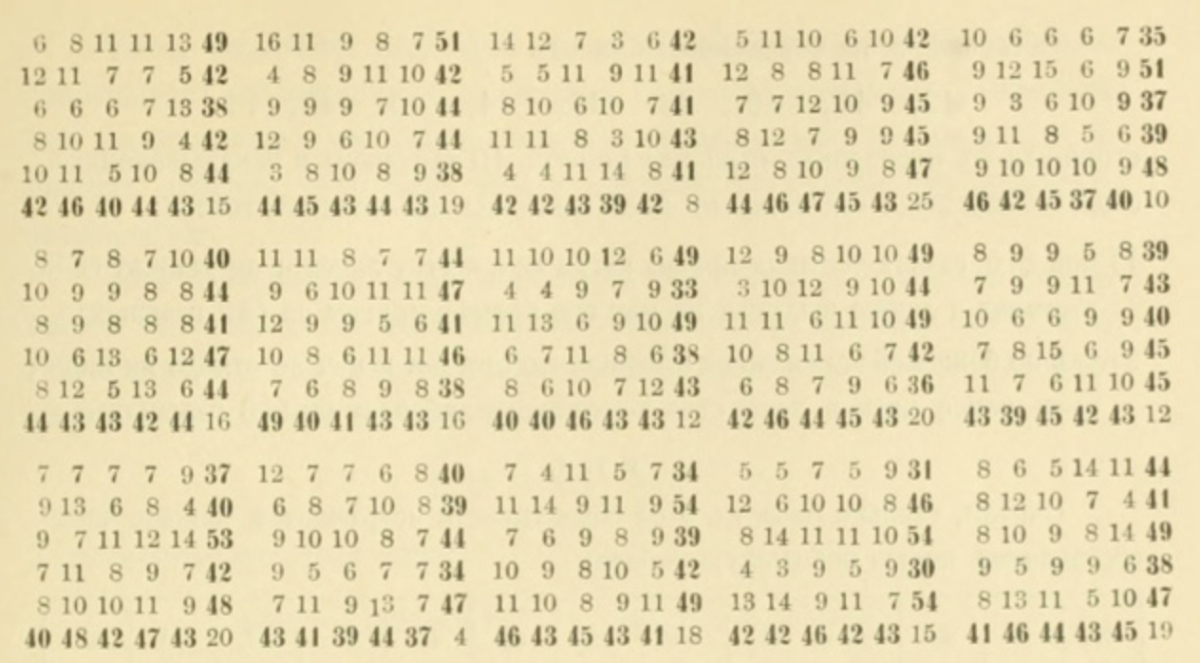

[12] In Markov's papers on chains, he restricted himself to chains with two states. His primary example was to consider the alternation between vowels and consonants in Alexander Pushkin's novel-in-verse, Eugene Onegin. Specifically, he wanted to determine if it is more likely for a vowel to be followed by a consonant or by another vowel. See [Hayes 2013] for more information on this work.

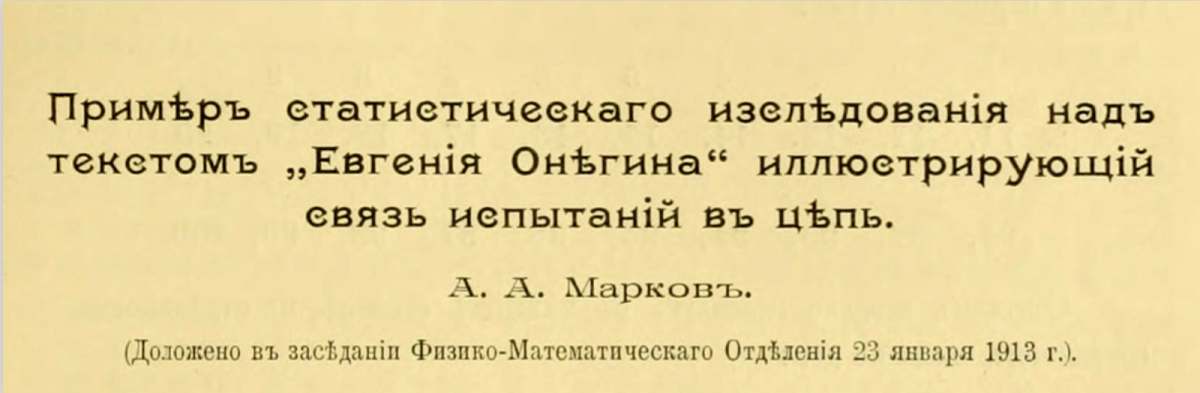

Figure 6. The title and part of Markov’s analysis of the frequency of vowels and consonants in

Eugene Onegin. Slides for “First Links of the Markov Chain: Probability and Poetry,” by Brian Hayes, 23 January 2013.

Alan Levine (Franklin and Marshall College), "A Selection of Problems from A.A. Markov’s Calculus of Probabilities: Problem 4 – A Simple Gambling Game," Convergence (November 2023)